float floor(vec3 ro, vec3 rd, vec3 normal, inout float t)

{

float d = dot(rd,-normal);

float n = dot(ro,-normal);

t = mix(-n/d,t,step(d,0.));

return d;

}

float sphere(vec3 ro, vec3 rd, float size, out float t0, out float t1, out vec3 normal)

{

float a = dot(rd,ro);

float b = dot(ro,ro);

float d = a*a - b + size*size;

if (d>=0.) {

float sd = sqrt(d);

float ta = -a + sd;

float tb = -a - sd;

t0 = min(ta,tb);

t1 = max(ta,tb);

normal = normalize(ro+t0*rd);

}

return d;

}

vec3 eye = vec3(0.,3.,10.);

vec3 screen = vec3(0.,3.,9.);

vec3 up = vec3(0.,1.,0.);

vec3 right = cross(up,normalize(screen - eye));

vec3 ro = screen - c.y*up + c.x*right;

vec3 rd = normalize(ro - eye);

float t = 10000.;

float d = floor(ro,rd,up,t);

float t0,t1;

vec3 sn;

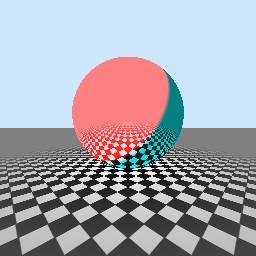

float ds = sphere(ro-vec3(0.,4.,0.),rd,4.,t0,t1,sn);

float b = step(t0,t);

t = mix(t,mix(t,t0,b),step(0.,ds));

float t2;

vec3 i=ro+rd*t;

vec3 rd2 = reflect(rd,sn);

float d2 = floor(i,rd2,up,t2);

float tr = mix(10000.,t2,step(0.,d2));

i=mix(i,i+rd2*tr,b*step(0.,ds));

float shade = 1.;

vec2 mxz = abs(fract(i.xz)*2.-1.);

float fade = clamp(1.-length(i.xz)*.07,0.,1.);

float fc = mix(.5,smoothstep(1.,.9,mxz.x+mxz.y),fade);

vec3 col = vec3(fc*shade);

vec3 lp = vec3(10.);

i=ro+rd*t;

col = mix(col,clamp(col+vec3(1.,0.,0.)*dot(sn,lp-i),0.,1.),step(0.,ds)*b);

col = mix(vec3(0.8,0.9,1.),col,clamp(step(0.,d)+step(0.,ds),0.,1.));

f = vec4(col,1.);
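For reference, here is the algebra behind the plane and sphere intersection helpers above, as a sketch assuming the ray direction $\mathbf{d}$ (`rd`) is normalized and, for the sphere, the ray origin $\mathbf{o}$ (`ro`) is measured from the sphere's centre, as the scene code does by subtracting the centre (0, 4, 0). The plane helper negates both dot products (its `n` is $-\hat{\mathbf{n}}\cdot\mathbf{o}$ and its `d` is $-\hat{\mathbf{n}}\cdot\mathbf{d}$), so `-n/d` is the same $t$. In the sphere helper, $a$ and $b$ are the variables of the same name and $\Delta$ is the returned `d`. The last line is the definition of GLSL's built-in `reflect()`.

$$
\begin{aligned}
\text{ray:}\quad & \mathbf{p}(t) = \mathbf{o} + t\,\mathbf{d}, \qquad \lVert\mathbf{d}\rVert = 1 \\
\text{plane } \hat{\mathbf{n}}\cdot\mathbf{p} = 0:\quad & \hat{\mathbf{n}}\cdot(\mathbf{o} + t\,\mathbf{d}) = 0 \;\Rightarrow\; t = -\frac{\hat{\mathbf{n}}\cdot\mathbf{o}}{\hat{\mathbf{n}}\cdot\mathbf{d}} \\
\text{sphere } \lVert\mathbf{p}\rVert = r:\quad & t^2 + 2a\,t + (b - r^2) = 0, \qquad a = \mathbf{d}\cdot\mathbf{o},\quad b = \mathbf{o}\cdot\mathbf{o} \\
& t_0 = -a - \sqrt{\Delta}, \quad t_1 = -a + \sqrt{\Delta}, \qquad \Delta = a^2 - b + r^2 \\
& \hat{\mathbf{n}}_s = \frac{\mathbf{o} + t_0\,\mathbf{d}}{r} \\
\text{reflection:}\quad & \mathbf{d}' = \mathbf{d} - 2\,(\hat{\mathbf{n}}_s\cdot\mathbf{d})\,\hat{\mathbf{n}}_s
\end{aligned}
$$

Since $\mathbf{o} + t_0\mathbf{d}$ lies on the sphere, its length is $r$, which is why dividing by $r$ and calling `normalize()` give the same normal.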

If you've been following me at all on Twitter, you've probably noticed that I've been spending some time recently playing with the great new shadertoy.com.

The new shadertoy (not to be confused with the original one) is the latest and greatest WebGL-based online shader editor.

It takes the publishing features of the GLSL Sandbox and adds draft shader saving, updating of published shaders, and user profiles with commenting, creating a more visible community of shader developers. Great work from everyone involved.

The first few articles on ThNdl were rooted firmly in basic 2D shader techniques. However, on shadertoy I've mainly been exploring 3D effects. A large number of the other shaders there are also 3D-based.

One reason for this is that WebGL lets you cut through the layers of abstraction that normally sit between you and the hardware when you are working in a browser. Shaders are compiled in your browser and run on the metal of your GPU. 3D scenes and effects tend to need more of that raw processing power than 2D scenes, and can make better use of it.

Modern desktop GPUs can have hundreds or even thousands of separate processing units. The shader you write runs on all of them at the same time, each instance rendering a different pixel. The latest cards can reach a theoretical peak of 5 teraflops: 5,000,000,000,000 (5 trillion) floating-point operations every second.

(Wow!)

So, what on earth can you sensibly do with the trillion or so calculations per second that a mid-range GPU offers? Not that much, it turns out.

Firstly, you want to animate something, ideally at 60 frames per second for a smooth, realistic experience. That leaves you with only about 16 billion calculations per frame.

Of course, you also want to fill a screen: Full HD, for example, is 1920×1080 pixels. So now our staggering raw teraflop of performance has been cut down to a less-than-impressive 8000 or so instructions per pixel per frame.
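Spelled out, assuming the 1-teraflop mid-range GPU mentioned above and counting one floating-point operation as one instruction, as the text does:

$$
\frac{10^{12}\ \text{operations/s}}{60\ \text{frames/s}} \approx 1.67\times10^{10}\ \text{operations/frame},
\qquad
\frac{1.67\times10^{10}\ \text{operations/frame}}{1920\times1080 = 2\,073\,600\ \text{pixels}} \approx 8000\ \text{operations/pixel/frame}
$$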

If you are rendering a traditional 3D scene modelled with lots of vertices and textures, this is more than enough for very complex scenes.

But many of the interesting shader experiments render a scene described mathematically in code, using techniques like ray tracing shapes, ray marching distance fields, or path tracing light emitters.

For these techniques, you often need to sample a 3D function describing the world hundreds of times per pixel, in order to find surfaces and to compute shadows, reflections and ambient occlusion. If you sample it just 100 times, that function must evaluate in a mere 80 instructions; otherwise you've blown your total instruction budget and your frame rate will start to drop.
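The per-sample figure is the same budget divided once more, assuming 100 samples per pixel and ignoring everything else the shader does for that pixel:

$$
\frac{8000\ \text{instructions/pixel}}{100\ \text{samples/pixel}} = 80\ \text{instructions/sample}
$$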

That's how you use up a trillion instructions per second rendering real-time 3D.

On mobile devices, 20 to 40 gigaflops is currently more common, rising to 100 gigaflops soon. But even 100 gigaflops is just 2% of the 5 teraflops of those power-guzzling desktop monsters!

WebGL came to desktop browsers first and, despite being based on OpenGL ES (the mobile version of OpenGL), it has been slow to arrive on mobile. On iOS it's limited to ads only (???), with no sign of that changing. In Chrome for Android it's now in the latest versions, but hidden behind a user <a href="chrome://flags">flag</a> and not switched on by default.

When it finally arrives on mobile for real, take that same wonderful-looking 3D shader running at 60 FPS on your desktop and run it in your mobile WebGL browser. The results will not be pretty. In the best case you'll get one frame per second. If you're unlucky, the device might lock up or reset.
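A rough way to see where the one-frame-per-second figure comes from, assuming the shader saturates a 1-teraflop desktop GPU at 60 FPS, the mobile GPU delivers the 20 to 40 gigaflops quoted above, both render the same number of pixels, and frame time scales with raw compute alone (real devices also differ in memory bandwidth and drivers, so treat this as an order-of-magnitude estimate):

$$
\text{FPS}_{\text{mobile}} \approx 60\ \text{FPS} \times \frac{20\text{ to }40\ \text{GFLOPS}}{1000\ \text{GFLOPS}} \approx 1.2\text{ to }2.4\ \text{FPS}
$$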

It's a bit of a dilemma that the same technology spans devices with such a wide range of performance. It's also unfortunate that the people most interested in WebGL at the moment are probably the ones with huge GPUs on their desktops. As a result, "mobile-compatible" WebGL content is practically non-existent at the moment, because it takes deliberate effort to target a "low" performance level.

In the meantime, while I'm sitting at my desk I'll carry on burning up those teraflops trying to create mini 3D worlds with shadertoy. After all, you can't save them up for later.